Learning Cloth Dynamics: 3D+Texture Garment Reconstruction Benchmark

Meysam Madadi, Hugo Bertiche, Wafa Bouzouita, Isabelle Guyon and Sergio Escalera

Proceedings of the NeurIPS 2020 Competition and Demonstration Track, PMLR 133:57-76, 2021.

Human avatars are important targets in many computer applications. Accurately tracking, capturing, reconstructing and animating the human body, face and garments in 3D is critical for human-computer interaction, gaming, special effects and virtual reality. In the past, this has required extensive manual animation. Despite advances in human body and face reconstruction, modeling, learning and analyzing human dynamics still need further attention.

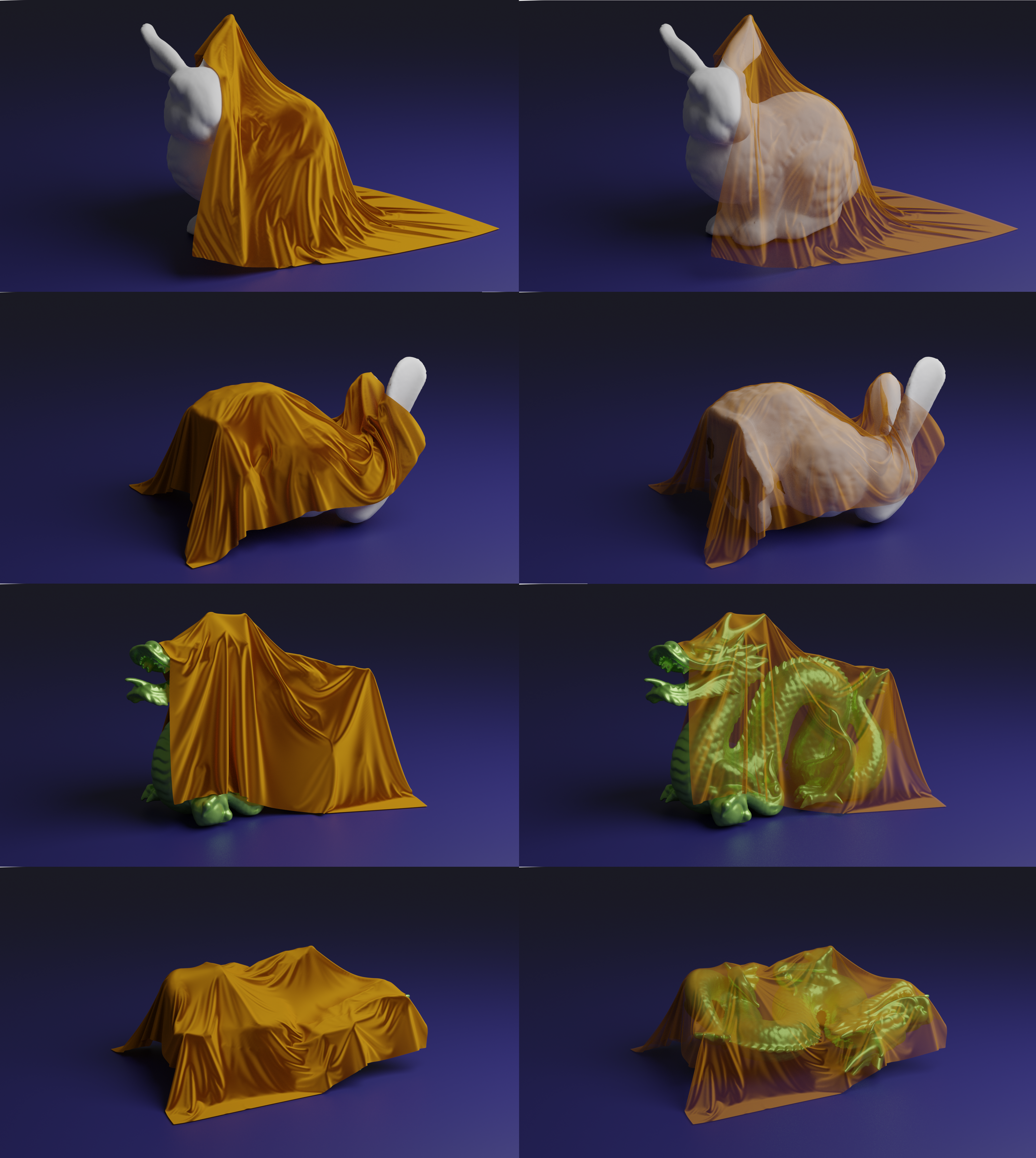

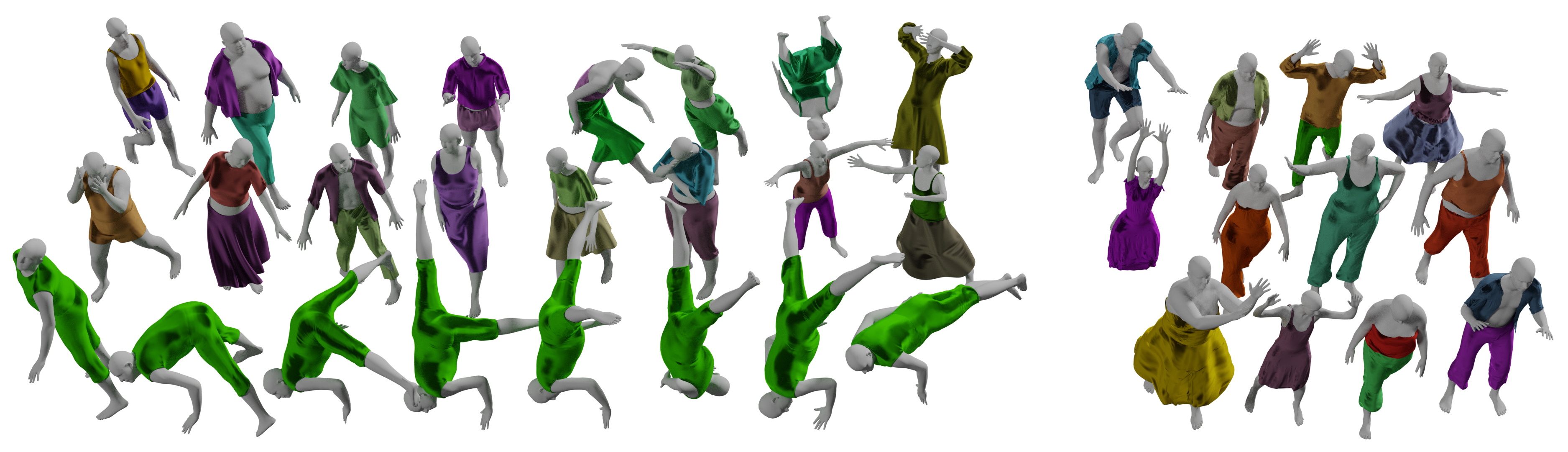

In this paper we plan to push the research in this direction, e.g. understanding human dynamics in

2D and 3D, with special attention to garments. We provide a large-scale dataset (more than 2M

frames) of animated garments with variable topology and type, calledCLOTH3D++. The dataset contains

RGBA video sequences paired with its corresponding 3D data. We pay special care to garment dynamics

and realistic rendering of RGB data, including lighting, fabric type and texture. With this dataset,

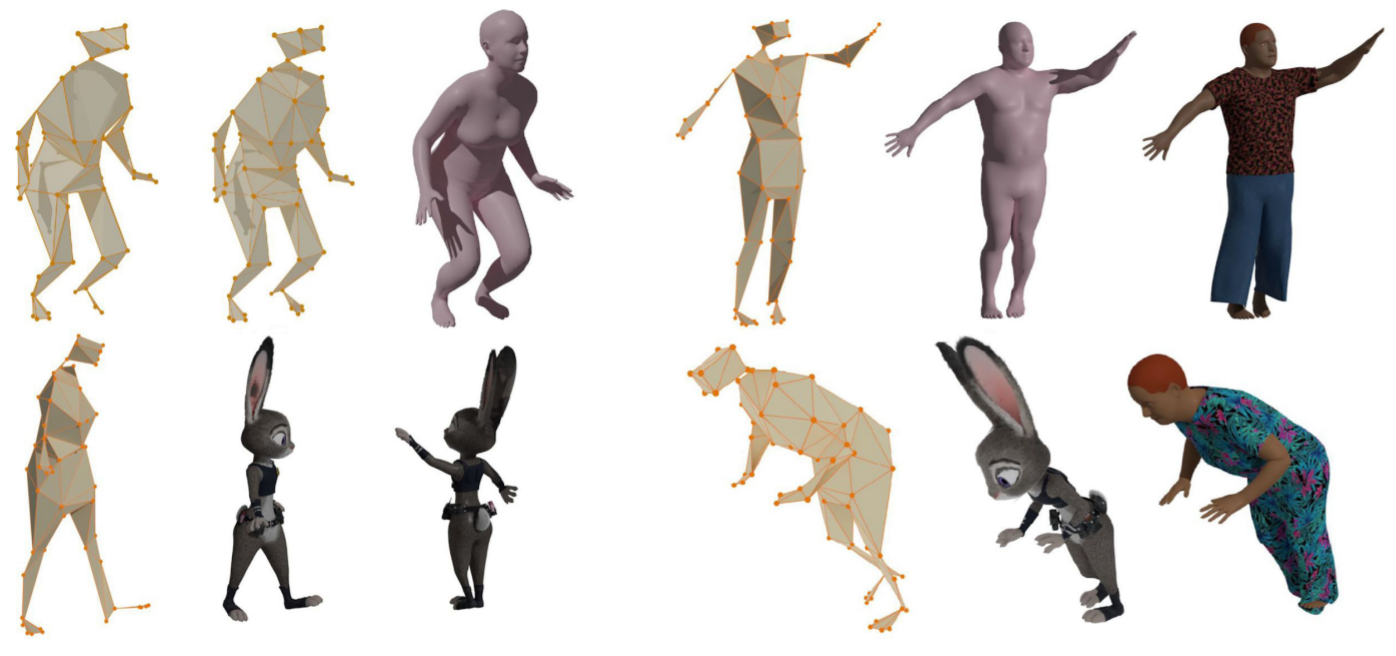

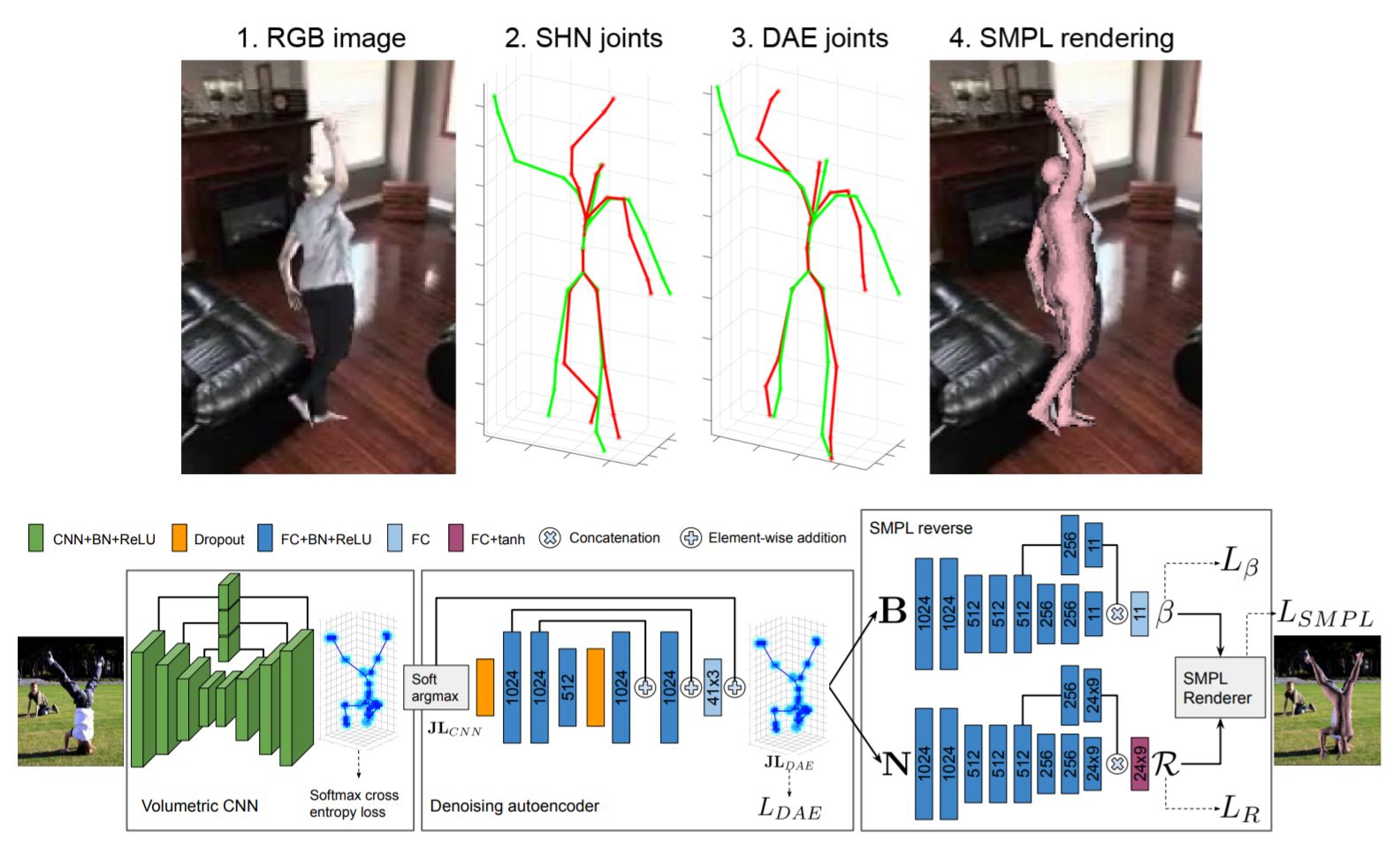

we hold a competition at NeurIPS2020. We design three tracks so participants can compete to develop

the best method to perform 3D garment reconstruction in a sequence from (1) 3D-to-3D garments, (2)

RGB-to-3D garments, and (3) RGB-to-3D garments plus texture. We also provide a baseline method,

based on graph convolutional networks, for each track. Baseline results show that there is a lot of

room for improvements. However, due to the challenging nature of the problem, no participant could

outperform the baselines.
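The abstract states that the baselines are built on graph convolutional networks but gives no architectural details. As a rough illustration only, the sketch below shows a generic graph convolution in the style of Kipf and Welling (symmetrically normalized adjacency with self-loops) applied to a triangulated garment mesh. It assumes NumPy, per-vertex input features such as 3D positions, and faces given as an (F, 3) integer index array; the helper names `normalized_adjacency` and `graph_conv` are hypothetical, and this is not the authors' baseline.

```python
import numpy as np


def normalized_adjacency(faces: np.ndarray, num_vertices: int) -> np.ndarray:
    """Build D^{-1/2} (A + I) D^{-1/2} from triangle faces.

    Assumption: `faces` is an (F, 3) array of vertex indices.
    A dense matrix is used for clarity; large meshes would need a sparse one.
    """
    adj = np.zeros((num_vertices, num_vertices))
    for a, b, c in faces:
        # Each triangle contributes its three undirected edges.
        for i, j in ((a, b), (b, c), (c, a)):
            adj[i, j] = adj[j, i] = 1.0
    adj += np.eye(num_vertices)  # self-loops keep each vertex's own features
    deg_inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))
    # Scale rows and columns by D^{-1/2}.
    return deg_inv_sqrt[:, None] * adj * deg_inv_sqrt[None, :]


def graph_conv(x: np.ndarray, a_hat: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One graph convolution: average neighbor features, project, apply ReLU."""
    return np.maximum(a_hat @ x @ weight, 0.0)


if __name__ == "__main__":
    # Hypothetical toy "garment": a unit quad split into two triangles.
    faces = np.array([[0, 1, 2], [0, 2, 3]])
    verts = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], dtype=float)
    rng = np.random.default_rng(0)
    w = rng.standard_normal((3, 8))  # maps xyz to 8 hidden features
    out = graph_conv(verts, normalized_adjacency(faces, len(verts)), w)
    print(out.shape)  # (4, 8)
```

Because mesh connectivity defines the graph, a layer like this can operate directly on garment vertices; garments with variable topology, as in CLOTH3D++, would simply yield a different adjacency matrix per garment.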

[Bibtex]

@InProceedings{pmlr-v133-madadi21a,
  title = {Learning Cloth Dynamics: 3D+Texture Garment Reconstruction Benchmark},
  author = {Madadi, Meysam and Bertiche, Hugo and Bouzouita, Wafa and Guyon, Isabelle and Escalera, Sergio},
  booktitle = {Proceedings of the NeurIPS 2020 Competition and Demonstration Track},
  pages = {57--76},
  year = {2021},
  editor = {Escalante, Hugo Jair and Hofmann, Katja},
  volume = {133},
  series = {Proceedings of Machine Learning Research},
  month = {06--12 Dec},
  publisher = {PMLR},
  pdf = {http://proceedings.mlr.press/v133/madadi21a/madadi21a.pdf},
  url = {https://proceedings.mlr.press/v133/madadi21a.html},
}